Your AI Pipeline Doesn't Need a Graph, It Needs a Better Runtime

You've built software systems before. You know the challenges: from flaky APIs and error handling to observability and rolling deployments. Now your team needs to ship AI features, and someone suggested LangGraph because "that's what everyone uses for agents." Six months later, you're debugging workflow state machines as you deploy new features and leave in-flight invocations in an inconsistent state. Back up. What went wrong?

Most AI applications are just API orchestration with non-deterministic, slow endpoints. The real challenges—the ones experienced engineers know how to solve—are durability, observability, and graceful evolution. Your AI pipeline doesn't need a graph; it needs the same solid engineering principles you'd apply to any distributed system.

The Zoo of AI Patterns

The AI community has developed a zoo of workflow patterns (beautifully cataloged in LangGraph's documentation). Each pattern gets its own diagram, its own abstraction, its own way of thinking:

Prompt chaining: Sequential LLM calls where each processes the previous output

Parallelisation: Multiple LLMs working simultaneously on subtasks

Orchestrator-worker: A central LLM breaking down tasks and delegating to worker LLMs

Evaluator-optimiser: One LLM generating responses while another provides feedback

Routing: Classifying inputs and directing them to specialised handlers

Agents: LLMs autonomously using tools in a loop towards a goal

But here's the thing: every single one of these patterns is just procedural code with control flow statements. Code-first implementations are described in Vercel’s AI SDK documentation.

The notable exception, present in many AI applications, is human-in-the-loop workflows where human input is interleaved with LLM invocations. More on this shortly.

The Real Problems in Production AI

While teams struggle with LangGraph's abstractions, the actual production challenges remain unsolved:

Partial Failures: Your pipeline is 15 minutes in when your service hits an out-of-memory error. You might have a checkpoint of the previous step in the database, but there’s no orchestrator to continue the workflow. The user is stuck in limbo.

Evolution Under Load: You need to add a prompt to the pipeline, or change the order of operations. However, there are invocations in flight or suspended waiting for human input. Should they run with the existing or new business logic?

Observability: When something goes wrong, you want stack traces, not state machine visualisations. You want to see exactly which API call failed and why, not which node in your graph entered an error state.

These are classic distributed systems problems, and experienced engineers know how to solve them. But LangGraph's abstractions make applying those solutions harder, not easier.

The Durable Execution Mirage

LangGraph's answer to these challenges is "Durable Execution"—the ability to persist workflow state and resume from checkpoints. This sounds compelling until you examine the implementation.

LangGraph's checkpointing happens automatically under the hood, storing snapshots of workflow state at each step. When a workflow needs to resume, it loads the latest checkpoint and continues execution. This works for simple interruptions, like human-in-the-loop scenarios, but falls short for real production challenges:

No Fault-Tolerant Orchestration: If the execution process crashes, the checkpoint sits in your database, but nothing drives the workflow to completion. You have perfect state persistence with no process to consume it. In distributed systems terms, you have durable storage but no fault-tolerant orchestrator.

Evolution Limitations: As Anthropic discovered while building their research system, evolving workflows under load requires sophisticated deployment strategies like rainbow deployments. Their engineering team had to implement custom solutions because "agents might be anywhere in their process" when you deploy updates. LangGraph's automatic checkpointing actually makes this harder—you can't easily migrate state between schema versions or run multiple workflow versions simultaneously.

Debugging Complexity: When a workflow fails, you don't get a stack trace—you get a state snapshot. Understanding what went wrong requires reconstructing the execution path through your graph, rather than following a clear call stack.

A Better Approach: Proper Durable Execution

The solution isn't to abandon durability—it's to implement it correctly. Durable execution engines like Restate or Temporal provide what LangGraph's checkpointing promises but doesn't deliver: fault-tolerant orchestration of long-running workflows.

These systems understand that the hard problems in distributed computing aren't about graph topologies—they're about reliably executing code in the presence of failures, managing state consistency, and evolving systems under load.

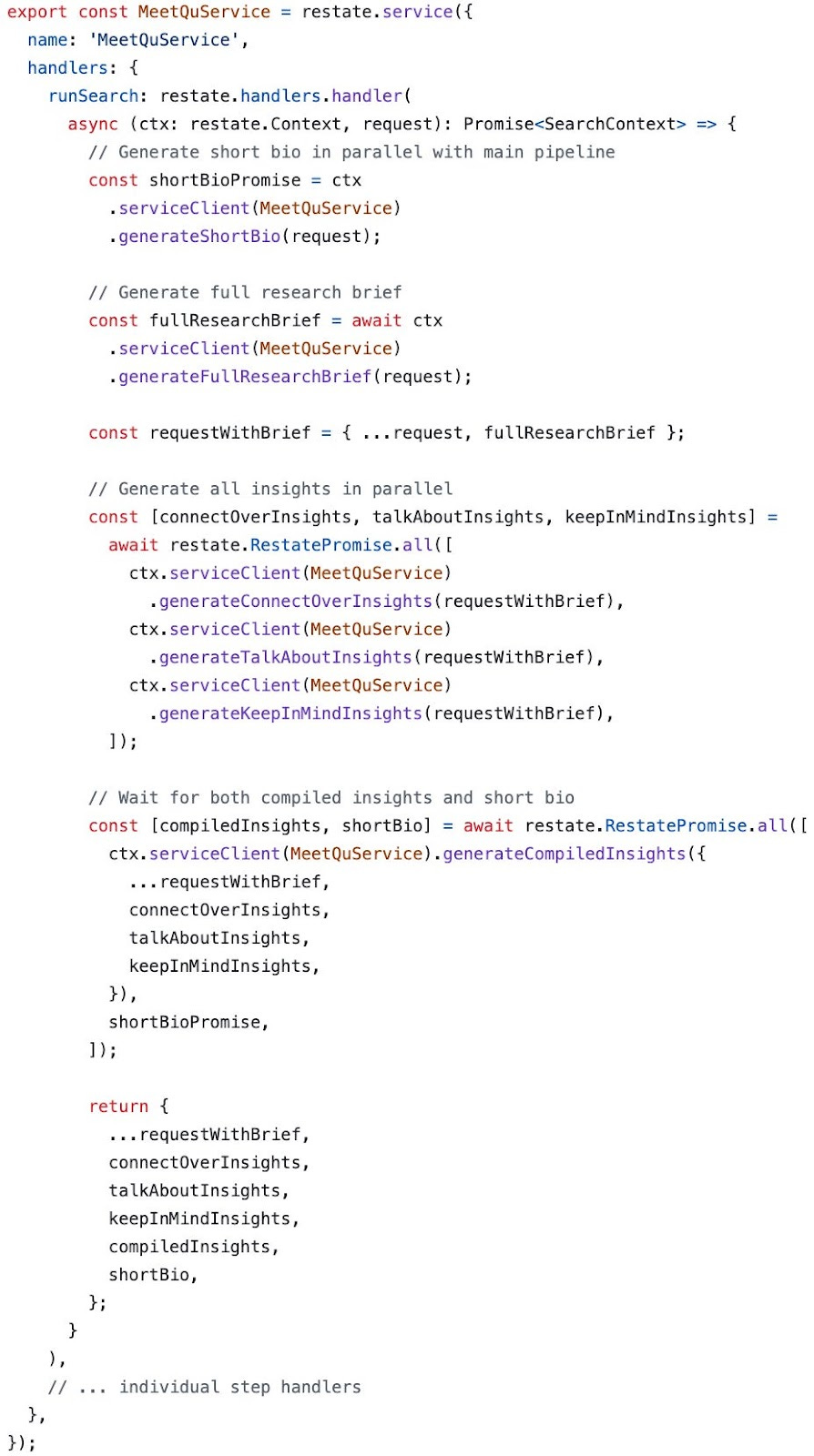

At Pond, we use Restate for MeetQu, our AI-powered meeting preparation tool. Our pipeline processes LinkedIn profiles and generates personalised insights through a 6-step process involving multiple LLM calls and Google searches. Here's how we structure it:

This looks like normal async code because it is normal async code. The difference is that Restate provides durable execution guarantees:

Automatic Recovery: If any node fails, Restate automatically retries from the last successful step

Fault-Tolerant Orchestration: The workflow is driven by Restate's runtime, not vulnerable execution nodes

Observability: Standard logging, tracing, and error handling work exactly as expected

Evolution Support: Deploy new versions while existing workflows continue on the old logic

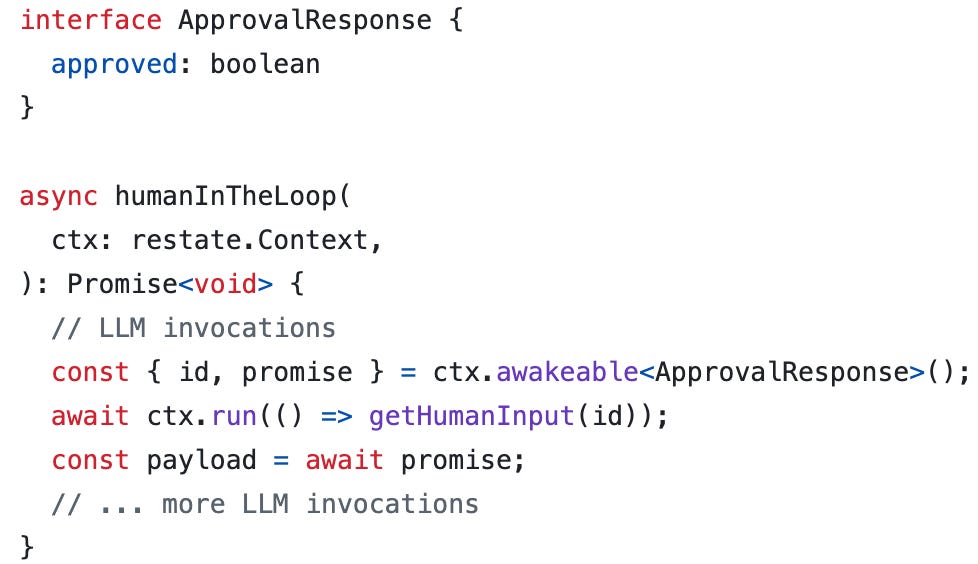

For human-in-the-loop scenarios, Restate's awakeables provide a clean alternative to LangGraph's interrupts:

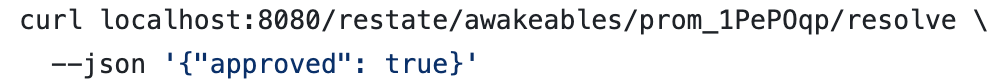

This approach is simpler than LangGraph's interrupt system, which requires understanding Command primitives, checkpoint management, and complex resumption semantics. With awakeables, external systems can resolve the suspended workflow by making a simple HTTP call:

The workflow can suspend for arbitrary periods while waiting for human input, without any state machine complexity.

The Engineering Reality

We still use LangChain under the hood for LLM interactions and Langfuse for observability and prompt management (both integrate smoothly). The difference is that we treat these as libraries, not architectural frameworks. Our code looks like normal TypeScript with async/await, because that's what it is.

This approach has served us well as an early-stage startup with rapidly evolving requirements. We've deployed multiple pipeline versions, added new steps, and changed business logic—all without the migration complexity that LangGraph would impose.

The key insight is that most AI applications are just distributed systems with non-deterministic, relatively slow API calls. The challenge isn't modelling your workflow as a graph—it's applying good distributed systems engineering to a domain with specific characteristics.

Practical Recommendations

If you're building production AI systems:

Start Simple: Use normal async/await code with proper error handling and observability. Most AI workflows are embarrassingly straightforward once you strip away the unnecessary abstractions.

Solve Real Problems: Focus on the distributed systems challenges that actually matter—failure recovery, graceful deployments, observability, and state management. These problems are well-understood and have proven solutions.

Choose Your Abstractions Carefully: If you need durable execution (and you probably do for production AI workflows), use a proper durable execution engine, not checkpointing as an afterthought.

Avoid the Graph Trap: Unless you're actually processing graphs, don't let graph-based frameworks add complexity to your system. The cognitive overhead rarely pays for itself.

Embrace Boring Technology: Your AI application doesn't need novel architectural patterns. It needs the same solid engineering practices you'd apply to any distributed system.

The AI tooling ecosystem has convinced us that building intelligent systems requires complex abstractions. In reality, the intelligence is in the models—your job is to orchestrate them reliably. That's a distributed systems problem, not a graph problem.

As Anthropic's engineering team learned while building their research system: "Multi-agent systems are highly stateful webs of prompts, tools, and execution logic that run almost continuously." The real challenges they faced weren't about graph topologies—they were about "durable execution of code," "resuming from where the agent was when errors occurred," and "preventing well-meaning code changes from breaking existing agents."

Your AI pipeline doesn't need a graph. It needs a runtime that understands the real challenges of production systems: failures happen, requirements evolve, and debugging needs to be straightforward. Build accordingly.

Want to get started with Restate for agentic AI? Check out their recent blog post on the topic and see their AI examples repo.